By Vera Burgos

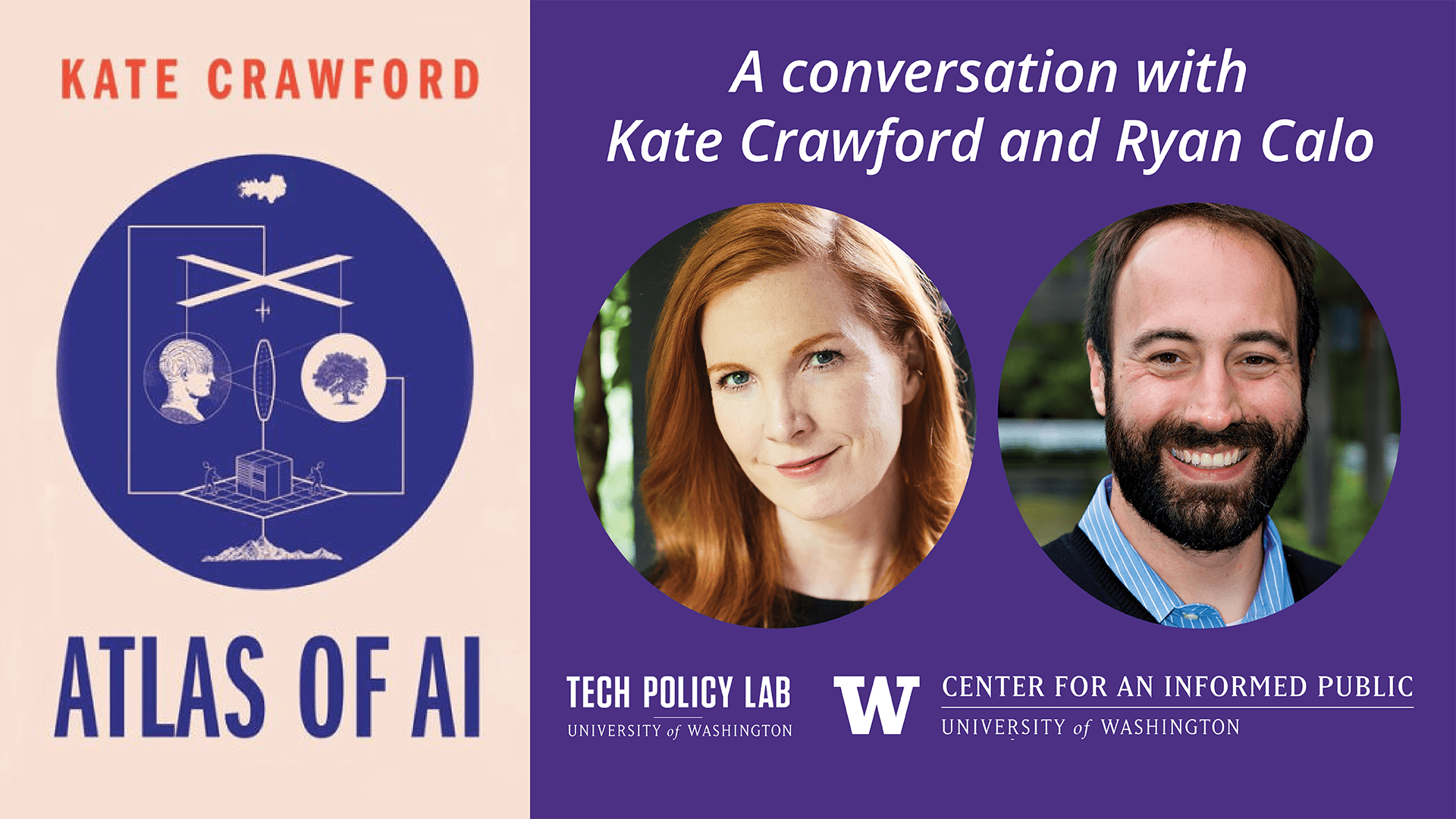

On May 13, the University of Washington’s Center for an Informed Public and Tech Policy Lab co-hosted a virtual book talk featuring Kate Crawford, a leading scholar studying the social implications of artificial intelligence (AI) and author of the recently published book Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence (Yale University Press, April 2021).

During the livestreamed discussion, moderated by UW School of Law professor Ryan Calo, Crawford, a research professor at the University of Southern California’s Annenberg School for Communication and Journalism, raised concerns about the largely invisible material and environmental impacts of AI, which in many cases draws on limited mineral resources such as lithium and cobalt. “We can no longer ignore the actual origins of these systems and their costs,” Crawford said. She was referring to her visits to server farms and mines, which she made in order to ground artificial intelligence, see its materiality, and understand its long-term impact.

As Calo, a CIP co-founder and Tech Policy Lab faculty co-director, pointed out during the discussion, the dialogue around AI continues to evolve: “…[W]e can move to conversations about manipulating consumers, about misinformation being amplified by algorithms.” Crawford and a few others have “really helped us see some of the ways in which these systems are also extractive, … literally extracting minerals and having an environmental impact, but also extracting other things, like data, and labor, and so on,” Calo continued.

Crawford dissected the concept of “enchanted determinism,” which describes how AI systems are often portrayed as a kind of enchanted, superhuman intelligence while also being treated as deterministic. That combination presents AI as accurate and predictive, and it leaves people feeling unable to intervene in these systems or even understand them. “We’ve lost the thread of how to regulate these systems well and I think features like that are a part of the reason,” Crawford said.

AI relies on classification systems and is trained on data “to recognize the world, and to ascribe either characteristics or identity to objects, to people, and things,” Crawford explained. “The way that the systems are being used as we categorize people according to things like skin tone … this connects to both kind of histories around physiognomy and phrenology, but also in terms of … racialized gender classifications that are done by centralized systems,” she added.

These classification systems even extend to emotion recognition.

“Psychologists and scholars for years have said that it’s not as simple as that, this one-to-one translation between facial movements and interior states doesn’t exist,” Crawford said of her research, which led her “through thinking about the points that drive systems like this to be constructed.”

Moreover, Crawford said, because many of these systems have been heavily funded by the military, “we start to see after September 11 the desire for the States to have that ability to see into your interiority, to see if you’re a potential terrorist for example. And these systems continue to fail.”

Crawford noted that in many cases, these classification systems come from private companies, protected by laws that allow them to keep their inner workings confidential. “It’s proprietary, and yet has effects on populations of billions of people now. We’re not seeing how those centralizing functions are working,” Crawford said. “That’s why there’s an urgency here now, and why I think we’re seeing such a shift of many scholars now raising the alarm.”

Rather than concentrating on the ethics of these classification systems, Crawford suggested shifting the focus to how power is distributed. “These sorts of forms of centralizing power and centralizing the ability to classify, to score, and to determine opportunities for other people… Doing a power analysis, we can go back to half of the 20th century philosophers who were already dealing with this,” Crawford said. “How do we get the field to start thinking about when a system is being constructed? Does it in fact just empower the already powerful, or does it find new ways of changing those asymmetries?”

Calo agreed, adding: “You have to show where power is, you have to locate it, map it, because otherwise you cannot have that conversation about whether the balance is appropriate.”